Compare The Cost of AWS Lambda, Fargate, and EC2 For Your Workloads

Choosing the most cost-effective AWS compute platform for a workload is not an easy task. I did the calculation for one of the projects I work on and decided to share my findings. In this article, I make a very high-level, simplified comparison to outline one way of approaching the problem.

I am not an expert in every compute option, so I encourage you to use the comment section to correct any wrong assumptions or to suggest improvements and ideas that should be incorporated into the comparison.

Configuration Setup

Using the AWS Pricing Calculator, we can compare the monthly cost of setups that are roughly equivalent in computing power. I found even this to be a tricky task. Each service has its own set of configurations to choose from, and they differ not just in vCPUs and memory, but also in network bandwidth limits, restrictions on the use of GPUs or EBS volumes, and so on.

To keep things simple, let us compare the services with a requirement of just 1 vCPU and about 2 GB of memory. For Lambda, CPU power scales with the configured memory size. A function gets the equivalent of one vCPU at 1,769 MB of memory, so this will be our pick for Lambda. Fargate lets us configure both the vCPU count and the memory size, so we can pick exactly 1 vCPU and 2 GB of memory.

For EC2, with such low vCPU and memory values, the only option among the current-generation instance types is the T family, which has burstable performance. This brings another level of complexity, because the instance price covers utilization up to the baseline CPU performance, and additional charges can apply when consumption exceeds the baseline. Among the previous-generation instance types, however, m1.small fits the requirements pretty well with 1 vCPU and 1.7 GB of memory, so we will use it rather than a T family instance type.

Additionally, the following options were not considered in the comparison for the sake of simplicity:

- ARM architecture CPUs

- Non-Linux OS

- Spot instances and spot Fargate tasks

- Data transfer and storage

- Reserved instances and compute savings plans

Cost Comparison

Intuitively, EC2 should be the least expensive service, followed by Fargate and then Lambda. The reasoning is that each successive service requires less infrastructure administration than the previous one and leaves more of the infrastructure management to AWS. The following graph shows the monthly price of each service as a function of the percentage of time it is utilized.

EC2 pricing is indeed the lowest, followed fairly closely by Fargate, while Lambda costs about twice as much as Fargate. EC2 pricing could be lowered further by using a T family burstable instance type, depending on the actual needs of your workload.
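The comparison above can be approximated with a few lines of Python. This is only a minimal sketch: the prices are my assumption of on-demand rates for x86 Linux in us-east-1 and may be outdated, the 730-hour month is a simplification, Lambda request charges and the free tier are ignored, and the names `monthly_costs` and the dictionary keys are made up for illustration.

```python
HOURS_PER_MONTH = 730  # assumed average month length

# Assumed on-demand prices in USD (us-east-1, x86, Linux); verify before use.
LAMBDA_PER_GB_SECOND = 0.0000166667
FARGATE_PER_VCPU_HOUR = 0.04048
FARGATE_PER_GB_HOUR = 0.004445
EC2_M1_SMALL_PER_HOUR = 0.044


def monthly_costs(utilization: float) -> dict[str, float]:
    """Return the monthly cost per service for a utilization between 0 and 1."""
    hours = HOURS_PER_MONTH * utilization
    return {
        "ec2_m1_small": hours * EC2_M1_SMALL_PER_HOUR,
        # 1 vCPU and 2 GB of memory
        "fargate_1vcpu_2gb": hours * (FARGATE_PER_VCPU_HOUR + 2 * FARGATE_PER_GB_HOUR),
        # Memory size taken as 1.769 GB, the setting that gives Lambda one vCPU
        "lambda_1769mb": hours * 3600 * 1.769 * LAMBDA_PER_GB_SECOND,
    }


for percent in (25, 50, 100):
    costs = monthly_costs(percent / 100)
    print(percent, {name: round(cost, 2) for name, cost in costs.items()})
```

Under these assumptions, the ordering and the roughly twofold gap between Lambda and Fargate hold at every utilization level, because all three costs scale linearly with the time the service is running.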

Billing And Startup Factors

As we saw above, the cost of running Lambda is pretty high in comparison to Fargate and EC2. Let us now examine the factors that can still make Lambda the right choice despite its higher price.

The first thing to consider is the billing interval of each service. EC2 is billed per second, with a minimum of 60 seconds. Billing starts when the instance is launched and stops when the instance is terminated or stopped. Fargate is also billed per second, with a minimum of 60 seconds. Billing starts when the image pull begins and stops when the task terminates. Lambda, on the other hand, is billed per millisecond with no minimum. Billing starts when your initialization or handler code starts running and stops when your code returns or otherwise terminates.

This is one of the reasons why Lambda is a good choice for workloads that are executed infrequently and are short-lived.
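To illustrate the difference in billing granularity, here is a small sketch that computes the billed duration of a single short run. The function names are hypothetical, and rounding up to the next full second or millisecond is my assumption about how a partial unit is charged.

```python
import math


def billed_seconds_ec2_or_fargate(runtime_seconds: float) -> int:
    """Per-second billing with a 60-second minimum (rounding up is assumed)."""
    return max(60, math.ceil(runtime_seconds))


def billed_seconds_lambda(runtime_seconds: float) -> float:
    """Per-millisecond billing with no minimum (rounding up is assumed)."""
    return math.ceil(runtime_seconds * 1000) / 1000


# A job that runs for a quarter of a second
print(billed_seconds_ec2_or_fargate(0.25))
print(billed_seconds_lambda(0.25))
```

For a job that finishes in 250 ms, the instance or task is charged for the full minimum of 60 seconds, while the function is charged only for the time it actually ran.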

Secondly, spinning up a new EC2 instance takes a minute or more, and starting a new Fargate task takes about as long. In comparison, creating a new Lambda instance usually takes a few hundred milliseconds for runtimes with interpreted languages and up to a few seconds for runtimes with compiled languages.

Depending on your workload, you may not be able to wait a minute for an EC2 instance or Fargate task to start, and so you need to keep the instance or task running. This is not the case for Lambda, where a new instance generally starts within seconds. You can also keep a Lambda instance warm for a negligible price by pinging it every few minutes with a CloudWatch event.

To underline the two factors described above, we can now compare the billing of services for a web API handler type of computing, where we want to have no startup delay and be ready to serve traffic at any time.

Both the EC2 instance and the Fargate task need to run 100% of the time to avoid startup delays. Lambda billing, on the other hand, depends only on the time your handler code actually spends serving web API traffic. Thus, Lambda remains the cheaper option until it is busy about 40 to 50% of the time, despite its higher price for the same computing power. This makes Lambda suitable for fast processing tasks, for example, IO tasks such as reading data from DynamoDB.
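The break-even point can be estimated by dividing the cost of an always-on instance or task by the cost of a Lambda function that is busy for the whole month. The sketch below does exactly that. The prices are again my assumption of us-east-1 on-demand rates, and `break_even_utilization` is a name made up for this example.

```python
HOURS_PER_MONTH = 730  # assumed average month length

# Assumed on-demand prices in USD (us-east-1, x86, Linux); verify before use.
ec2_always_on = HOURS_PER_MONTH * 0.044                           # m1.small
fargate_always_on = HOURS_PER_MONTH * (0.04048 + 2 * 0.004445)    # 1 vCPU, 2 GB
lambda_fully_busy = HOURS_PER_MONTH * 3600 * 1.769 * 0.0000166667  # 1.769 GB


def break_even_utilization(always_on_cost: float, lambda_full_cost: float) -> float:
    """Fraction of time Lambda can be busy before it costs more than always-on."""
    return always_on_cost / lambda_full_cost


print(round(break_even_utilization(ec2_always_on, lambda_fully_busy), 3))
print(round(break_even_utilization(fargate_always_on, lambda_fully_busy), 3))
```

With these assumed prices, the result lands at a little over 40% against EC2 and a little over 45% against Fargate. Request charges, which this sketch ignores, would push the break-even point slightly lower.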

Real World Example


We use a Lambda function on one of our projects to handle API requests. It has a memory configuration of 2 GB, mainly to minimize cold-start delays. Otherwise, it could run with a lower configuration. It uses a .NET library that is over 4 MB in size, so the cold-start difference between, for example, 512 MB and 2 GB of memory is noticeable.

The traffic to the function has been steadily increasing over the last months, and we want to find out whether it is time to consider migrating to another service.

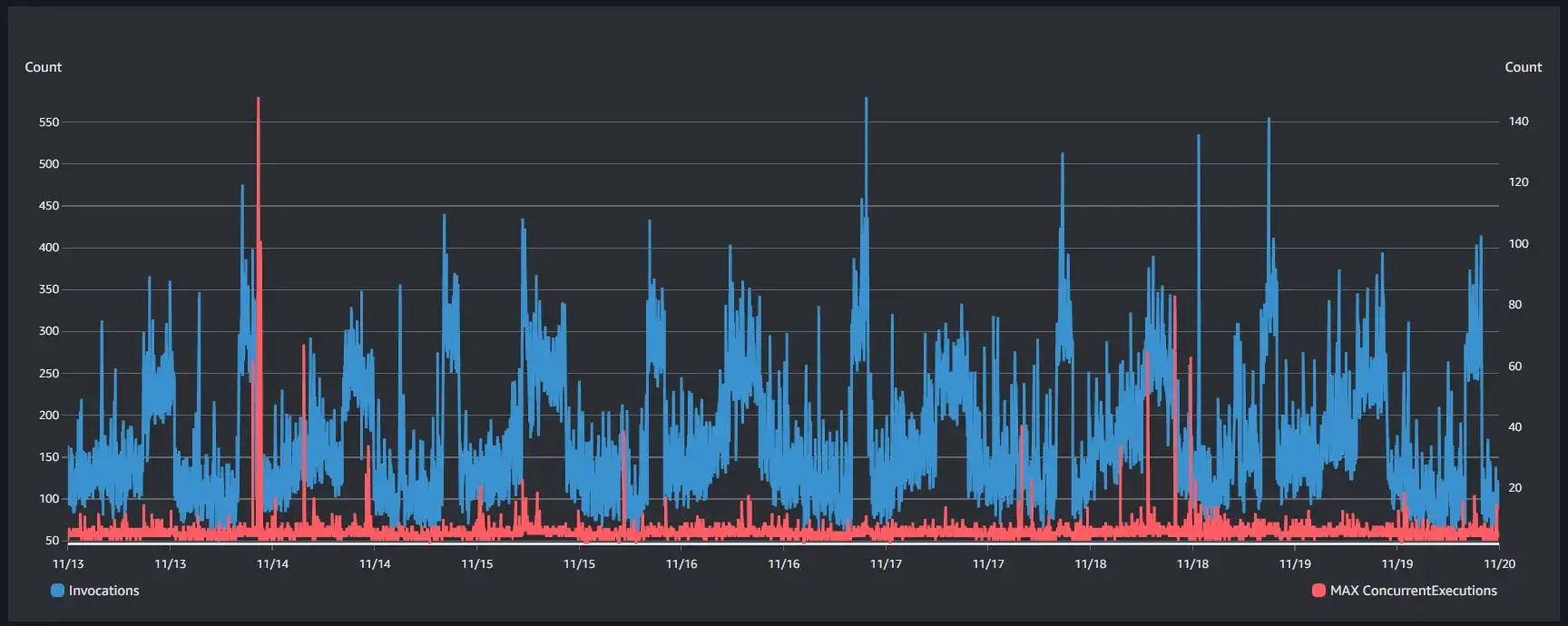

According to the daily utilization metrics, the function is invoked about 250k times per day on average, which is 2.89 requests per second. The average execution duration is somewhere around 250 ms. Multiplying 2.89 requests per second by 250 ms gives approximately 723 ms of execution time per second of wall-clock time. This means that the function is utilized roughly 70% of the time.

More granular one-minute interval metrics show that the invocation pattern is spiky, but in general the load is handled by a little less than 10 concurrent instances of the function.
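The utilization estimate is simple enough to express in code. This is a minimal sketch of the arithmetic only, with a function name invented for the example. It assumes traffic is spread evenly over the day, which the real invocation pattern is not.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_busy_fraction(invocations_per_day: float, avg_duration_ms: float) -> float:
    """Average execution time consumed per second of wall-clock time."""
    requests_per_second = invocations_per_day / SECONDS_PER_DAY
    return requests_per_second * avg_duration_ms / 1000


# 250k invocations per day at about 250 ms each
print(round(average_busy_fraction(250_000, 250), 3))
```

A result above 1.0 would mean that, on average, more than one instance of the function is busy at any moment.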

The collected metrics signal that we have passed the threshold where Lambda billing was optimal. To handle the traffic with the same computing power and have room to grow, we could use, for example, 2 multi-AZ Fargate tasks, each with 0.5 vCPU and 1 GB of memory. Based on the graphs above, this would reduce the monthly bill from $53.50 to $36.04. We could attempt to lower the costs further by using right-sized burstable EC2 instances and hosting the container or app there. This is of course only a rough theoretical idea that would require verification on the real application.

As a closing note, I would like to mention that raw billing costs should be weighed together with complexity and infrastructure management costs. In specific scenarios, this might mean that even though, for example, EC2 billing costs are a few percent lower than those of Fargate or Lambda, the difference does not make up for the added complexity of the solution.

This piece was written by Milan Gatyas, .NET Tech Lead of Apollo Division. Feel free to get in touch.
